🤖 AI-Generated Content

This content has been created using artificial intelligence. While we strive for accuracy, please verify important information independently.

There is a lot of talk these days about digital creations that look incredibly real, and one topic that has surfaced is deepfakes involving Rima Hassan. It is a serious matter when images or videos appear to show someone doing or saying things they never did, especially when that person is well known. The technology can place anyone into a recording or picture they were never part of, which many people find unsettling.

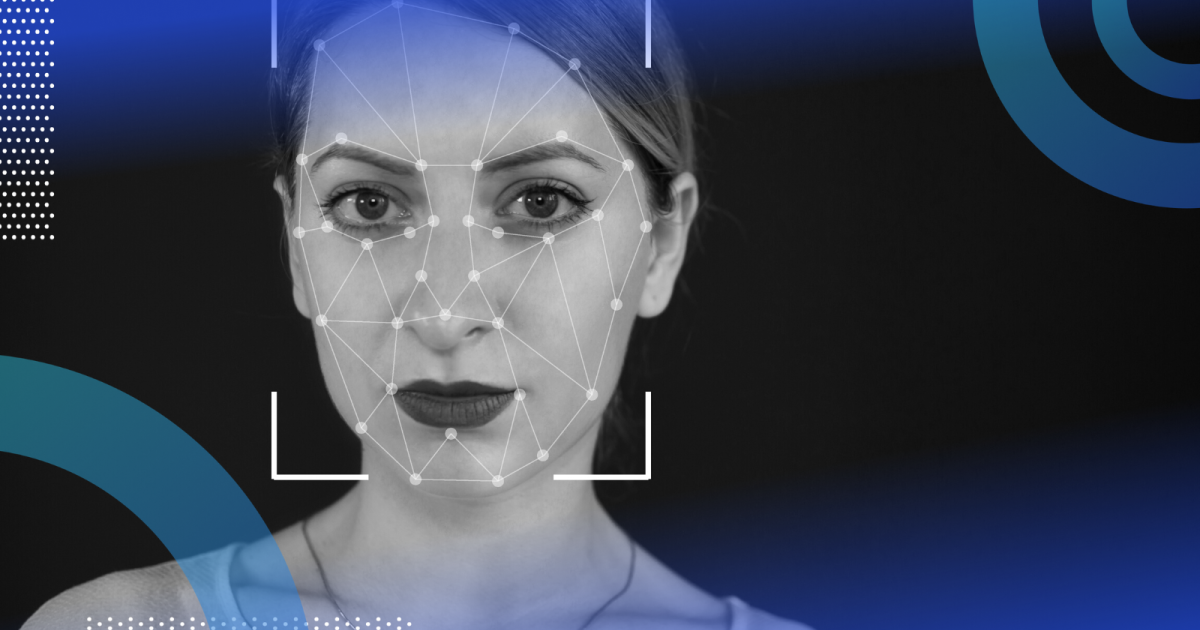

A deepfake is a type of synthetic digital content. In its classic form, a person in an image or video has their likeness swapped with someone else's. The technique relies on a form of artificial intelligence that can produce convincing fake pictures, video, and audio. The term names both the method and the result it produces.

These fabricated pictures, videos, and sounds are generated by AI systems and depict events that never happened. In the narrower sense, a deepfake is content that has been altered to put one person's face or body onto another's. The "deep" comes from deep learning, the machine-learning method used to build them.

Table of Contents

- Rima Hassan - A Public Figure's Profile

- What Exactly Are Deepfakes and How Do They Work?

- The Technology Behind Deepfake Rima Hassan

- How Can We Spot a Deepfake Rima Hassan?

- Protecting Yourself from Deepfake Misinformation

- What Are the Wider Concerns Around Deepfake Technology?

- The Future of Deepfake Rima Hassan and Digital Identity

- What Steps Can Organizations Take Against Deepfake Threats?

Rima Hassan - A Public Figure's Profile

Rima Hassan is a familiar face to many people. Her work has often put her in the spotlight, and her activities and public statements shape how the public perceives her. Because she is so visible, her image and voice carry weight in public discussion. People who are seen and heard this often tend to become the subject of all sorts of digital content, both genuine and, unfortunately, fabricated.

As a public figure, her likeness is widely recognized. That recognition also means her image can be used in ways she never intended. Someone trying to make a convincing fake video might choose a well-known face like hers precisely because viewers will recognize it instantly. This is one of the challenges that comes with public recognition in a heavily digital world.

Knowing who Rima Hassan is helps explain why her name comes up in discussions about deepfakes. It also shows that anyone with a public presence can become a target of this kind of manipulation. The issue is not only the technology itself but the people it affects.

Personal Details and Background

| Detail | Information |
| --- | --- |
| Full Name | Rima Hassan |
| Occupation | Public Advocate / Commentator |
| Known For | Public speaking, social commentary |
| Public Profile | Highly visible in media and online platforms |

What Exactly Are Deepfakes and How Do They Work?

At their core, deepfakes are synthetic media. They are not original recordings of real events but computer-generated content. A deepfake can convincingly place someone, such as a famous person, into a video or picture where they never were. It works like a digital puppet show that uses real people's faces.

More specifically, in a face-swap deepfake the appearance of the person in an image or video is replaced with someone else's. If a video of someone speaking turns out to be a deepfake, the face you see may belong to a different person entirely, mapped onto the body of whoever appeared in the original footage. The swap is done with AI models trained to reproduce a face closely enough to mimic it convincingly.

The technology is a branch of AI that creates believable fake images, videos, and audio. Because the word "deepfake" describes both the method and the finished product, people may use it for the software that makes fakes or for a specific fake video. Context usually makes clear which is meant.

These synthetic images, videos, and sounds depict things that never happened. Put simply, a deepfake is media that has been digitally altered to swap one person's face or body with another's, and it is built with deep learning, a type of AI. That is where the name comes from: "deep learning" combined with "fake."

A deepfake is a sophisticated form of synthetic media. It uses machine-learning methods to generate or alter audio, video, or pictures so they look real. The model learns from many real examples of a person's features and movements and then produces new material that closely imitates them.

The Technology Behind Deepfake Rima Hassan

A deepfake, such as one that might involve Rima Hassan, is created with deep learning. Deep learning uses neural networks with many layers that learn to recognize patterns from examples. The process is loosely comparable to how a person learns to tell a cat from a dog after seeing many of each, except that the models learn from far more examples than a person could study.

To make a deepfake of a particular person, such as Rima Hassan, the model is trained on a large collection of that person's images and video clips, often thousands of them. From these examples it learns the details of her face: her expressions, how her mouth moves when she speaks, and even how she tilts her head.

After training, the system has effectively learned a detailed digital model of that person. It can use that model to generate new images or video of them in scenes they were never part of. For example, given footage of someone else speaking, the model can render Rima Hassan's face onto that person, making it look as though she is the one talking. In the widely used face-swap design, a shared encoder learns pose and expression from both people, and each person has a separate decoder that learns to draw that person's face. Feeding one person's encoded frames to the other person's decoder produces the swap. The goal is a result so seamless that it is very hard to tell it is not real, which is what makes the technique concerning.

The more data the model has, the better its fakes tend to be. Public figures, who have a large volume of images and videos online, are therefore easier targets: the sheer amount of available footage makes it easier for these programs to learn and reproduce their likeness. Their public visibility becomes a vulnerability.

How Can We Spot a Deepfake Rima Hassan?

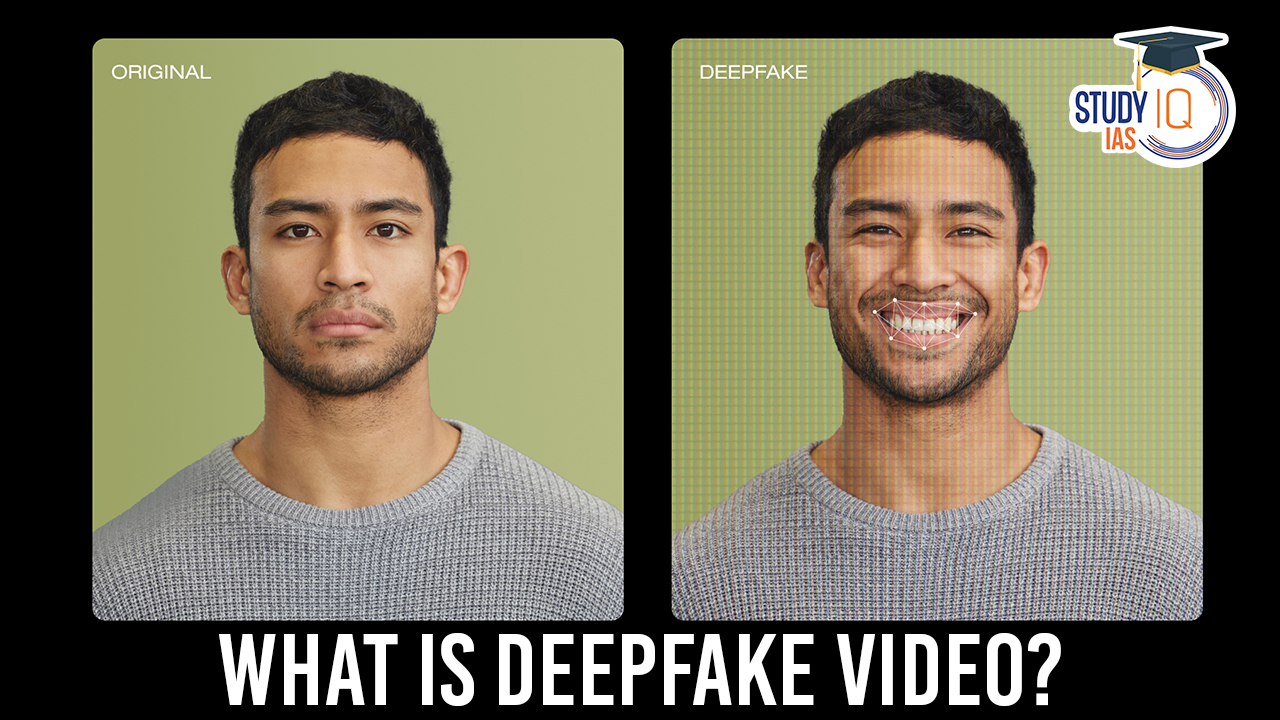

Knowing how deepfakes are made is one thing. Recognizing one, for example a deepfake of Rima Hassan, is another challenge entirely. The technology keeps improving, so detection is difficult, but there are still tell-tale signs to look for.

One common sign is in the face itself. The skin may look too smooth or plastic-like, or it may be blurry in some areas while other parts are sharp. Watch the eyes: they may not blink naturally, or they may look in odd directions. The mouth can also give it away. Do the lip movements match the words being spoken? Sometimes the words do not quite sync with the lips, or the teeth look strange or disappear altogether. These details are subtle, but they can make a difference.
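The blinking cue can be made more concrete. The sketch below computes the eye aspect ratio (EAR), a common landmark-based blink measure, and counts blinks as short dips below a threshold. It is a minimal example that assumes you already have six eye landmarks per frame from some face-landmark detector (not shown). The 0.2 threshold, the two-frame minimum, and the function names are illustrative assumptions, not calibrated values. Newer deepfakes often blink normally, so an unusual blink rate is at most a weak hint.

```python
import math


def eye_aspect_ratio(pts):
    """EAR for one eye from six (x, y) landmarks p1..p6.

    Assumed ordering: p1 and p4 are the eye corners, p2/p3 sit on the upper
    lid and p6/p5 on the lower lid, so p2-p6 and p3-p5 are vertical pairs.
    """
    p1, p2, p3, p4, p5, p6 = pts
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_series, threshold=0.2, min_frames=2):
    """Count runs of at least `min_frames` consecutive frames with EAR below `threshold`."""
    blinks = 0
    run = 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # a blink still in progress when the clip ends
        blinks += 1
    return blinks


def blinks_per_minute(ear_series, fps, **kwargs):
    """Blink rate over the whole clip; `fps` is the video frame rate."""
    minutes = len(ear_series) / fps / 60.0
    return count_blinks(ear_series, **kwargs) / minutes if minutes > 0 else 0.0


# An open eye shaped like a wide hexagon gives EAR = (2 + 2) / (2 * 3)
open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
print(round(eye_aspect_ratio(open_eye), 3))  # 0.667
```

A clip whose blink rate is far below what you would expect from a relaxed speaker might deserve a closer look. Lighting, video quality, and the person's own habits all affect the number, so it cannot settle the question by itself.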

Also consider the lighting. Does the light on the person's face match the lighting in the rest of the scene? In deepfakes, the lighting on a swapped face often looks slightly off, as though the face does not belong there. Check the edges of the face too, especially around the hair and ears. Fuzzy or distorted edges are a good indicator that something has been altered.
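One way to compare sharpness between a face and its surroundings is the variance of the Laplacian, a common blur measure. Regions with soft, weak edges produce a low variance, and crisp, high-contrast regions produce a high one. The sketch below assumes you have already cropped a grayscale face region and a background region into 2D lists (the cropping is not shown), and the function names are hypothetical. There is no universal threshold. Compression, motion blur, or shallow depth of field can produce large ratios in genuine footage, so treat the result as a prompt for closer inspection rather than a verdict.

```python
def laplacian_variance(gray):
    """Variance of the 4-neighbour Laplacian over the interior pixels of a
    grayscale image given as a list of equal-length rows (at least 3x3)."""
    h, w = len(gray), len(gray[0])
    responses = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Kernel [[0, 1, 0], [1, -4, 1], [0, 1, 0]]
            responses.append(gray[y - 1][x] + gray[y + 1][x]
                             + gray[y][x - 1] + gray[y][x + 1]
                             - 4 * gray[y][x])
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


def sharpness_ratio(face, background):
    """Above 1: face region is sharper than the background; below 1: blurrier."""
    bg = laplacian_variance(background)
    return laplacian_variance(face) / bg if bg > 0 else float("inf")


# A high-contrast checkerboard versus the same pattern at much lower contrast
sharp = [[255 * ((x + y) % 2) for x in range(6)] for y in range(6)]
soft = [[10 * ((x + y) % 2) for x in range(6)] for y in range(6)]
print(sharpness_ratio(sharp, soft))  # 650.25
```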

The sound can be a clue as well. In a video with speech, listen closely to the voice. Does it sound natural, or are there odd pauses or shifts in tone? Audio quality that differs noticeably from the video quality can also be a sign. Finally, consider the context: does what the person says or does seem out of character? If it feels too shocking to be true, it deserves a second, close look.
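A rough way to quantify the lip-sync check is to compare, frame by frame, how wide the mouth is open with how loud the audio is. You then look for the time offset at which the two signals line up best. This sketch assumes both signals have already been extracted and resampled to the video frame rate (not shown). `best_lag` is a hypothetical helper. Dedicated lip-sync detectors use learned audio-visual features rather than this simple correlation.

```python
def _pearson(a, b):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / (va * vb) ** 0.5 if va > 0 and vb > 0 else 0.0


def best_lag(mouth_open, audio_energy, max_lag):
    """Return (lag, r): the lag in frames that maximizes the correlation
    between mouth_open[t] and audio_energy[t + lag], and that correlation."""
    best, best_r = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            a, b = mouth_open[:len(mouth_open) - lag], audio_energy[lag:]
        else:
            a, b = mouth_open[-lag:], audio_energy[:len(audio_energy) + lag]
        n = min(len(a), len(b))
        if n < 2:
            continue
        r = _pearson(a[:n], b[:n])
        if r > best_r:
            best, best_r = lag, r
    return best, best_r


mouth = [0, 0, 1, 3, 1, 0, 0, 2, 4, 2, 0, 0, 0, 1, 0, 0]
audio = [0, 0] + mouth[:-2]  # audio trails the mouth by two frames
lag, r = best_lag(mouth, audio, max_lag=4)
print(lag)  # 2
```

Two results might suggest that the audio and mouth movements are out of step: a best lag far from zero, or a weak best correlation. A genuine but poorly encoded video can show the same symptoms.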

Spotting deepfakes means acting a bit like a detective and paying attention to small details your brain would otherwise skip over. The more you know about how fakes are created, the better you will be at noticing when something is off. Keep these signs in mind if you come across questionable content involving Rima Hassan.

Protecting Yourself from Deepfake Misinformation

A better grasp of the technology helps decision-makers protect themselves. The same applies to everyone facing misinformation spread through deepfakes, including fakes that might feature someone like Rima Hassan. Protecting yourself comes down to healthy skepticism and a few good habits.

First, always question the source. Where did you see the video or image? Was it on a reputable news site, or did it appear on a little-known social media account? Trustworthy news organizations usually have fact-checking processes, and content from unverified sources calls for caution. Do not believe something just because you saw it online.

Consider the emotional pull of the content. Deepfakes are often designed to provoke strong reactions such as anger, fear, or excitement. If something triggers an intense emotion immediately, pause, because that first reaction can cloud your judgment. Step back and ask yourself whether the content is designed to manipulate you.

Cross-reference the information. If you see a video of Rima Hassan saying something surprising, check whether major news outlets are reporting it and whether several independent sources confirm it. If only one obscure site is covering it, that is a major red flag. Think of it as getting a second opinion on the news.
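The "multiple, independent sources" check can be written as a simple rule: collect the links that report the claim and count how many distinct outlets they come from. The helper below is hypothetical. Its idea of an outlet is deliberately naive (the last two labels of the hostname), so it gets suffixes such as `.co.uk` wrong; a real tool would use the Public Suffix List. Distinct domains are also not the same as independent reporting, because outlets often republish the same wire story.

```python
from urllib.parse import urlparse


def outlet(url):
    """Naive outlet key: the last two labels of the hostname,
    e.g. 'news.example.com' -> 'example.com'. Wrong for suffixes like '.co.uk'."""
    host = (urlparse(url).hostname or "").lower()
    return ".".join(host.split(".")[-2:])


def independently_reported(report_urls, min_outlets=2):
    """Return (meets_threshold, sorted list of distinct outlets)."""
    outlets = {outlet(u) for u in report_urls if urlparse(u).hostname}
    return len(outlets) >= min_outlets, sorted(outlets)


reports = [
    "https://www.example.com/story",
    "https://news.example.com/story-copy",
    "https://example.org/analysis",
]
print(independently_reported(reports))  # (True, ['example.com', 'example.org'])
```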

Be mindful of what you share. Spreading deepfakes, even unknowingly, feeds the misinformation problem. Before you hit share, take a moment to verify. A quick search or a check against the signs described earlier can keep you from spreading fake content.

Finally, stay informed about the technology. The better you understand how deepfakes are made and how they are evolving, the better you will be at spotting them. Ongoing awareness is a key part of not being misled by convincing fakes, such as a deepfake of Rima Hassan.

What Are the Wider Concerns Around Deepfake Technology?

Deepfakes can be used to harass and even threaten individuals and businesses. The ability to create content that looks real but is entirely fake has serious implications for society as a whole, well beyond any single deepfake of Rima Hassan.

One major worry is reputational harm. A deepfake showing a public figure, or an ordinary person, doing something scandalous or saying something awful can spread quickly. It can damage their reputation and possibly their career or personal life, and that damage is hard to undo even after the truth comes out. It amounts to a digital smear campaign that is especially effective because it looks real.

There is also the risk of financial fraud. A deepfake could impersonate a CEO or senior executive and instruct staff to transfer large sums of money. If the face and voice look and sound exactly like the real person, employees may comply, and the company could suffer heavy losses.
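A common safeguard against this kind of impersonation is out-of-band verification. Under this rule, a large transfer is never made because a call or video looked convincing. It goes ahead only after someone confirms it through a contact already on file. The sketch below encodes that rule. The class, field names, and the 10,000 threshold are hypothetical policy choices, not a standard.

```python
from dataclasses import dataclass

# Hypothetical policy value: transfers at or above this amount need a callback.
CALLBACK_THRESHOLD = 10_000.0


@dataclass
class TransferRequest:
    requester: str                    # who the request claims to come from
    amount: float
    callback_confirmed: bool = False  # True only if confirmed via a contact already on file


def may_execute(req: TransferRequest) -> bool:
    """Small transfers pass; large ones require independent confirmation,
    no matter how convincing the requester's face or voice appeared."""
    if req.amount < CALLBACK_THRESHOLD:
        return True
    return req.callback_confirmed


urgent = TransferRequest(requester="CEO (video call)", amount=250_000.0)
print(may_execute(urgent))  # False until someone calls back on the number on file
```

The rule deliberately ignores how authentic the request seemed. A convincing face or voice is exactly what a deepfake provides, so it cannot be the basis for approval.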

In politics, deepfakes can spread false information about candidates or events. A fake video of a politician making controversial statements they never made, released just before an election, could sway public opinion and even affect the result. This undermines trust in what we see and hear, and a healthy democracy depends on that trust.

Beyond that is the broader issue of trust. If people can no longer tell what is real, they start to doubt all digital media, which makes it harder to believe genuine news or even personal videos. That erodes a shared understanding of reality. The implications therefore reach well beyond individual incidents such as a deepfake of Rima Hassan; they affect how we perceive truth itself.

Finally, there is the personal distress. Being the subject of a deepfake can be deeply upsetting and violating. It takes away a person's control over their own image and identity and can cause significant emotional harm. The technology itself may be neutral, but its potential for misuse carries heavy consequences.

The Future of Deepfake Rima Hassan and Digital Identity

As deepfake technology improves, the line between real and fake keeps blurring. In the future it will likely be even harder to tell whether a video or image, such as one featuring Rima Hassan, is genuine or fabricated, because the systems that produce these fakes keep getting better and their output keeps getting more convincing.

This poses a significant challenge for digital identity, meaning how we are represented online through our photos, videos, and interactions. If someone can easily create fake content that looks just like us, we lose control over our own online presence. For public figures, whose digital identities are closely tied to their careers, this is a serious concern. It is almost like losing ownership of your own face and voice.

The future may bring an "arms race" between those who create deepfakes and those who try to detect them. As detection improves, generation techniques adapt to evade it, and the cycle repeats. That back-and-forth makes staying informed and developing critical viewing skills even more important for everyone.

Deepfakes may also become more personalized. Instead of depicting a public figure, a deepfake could be tailored to deceive one specific person, which would make it even more dangerous. The threat then includes not only widespread misinformation but also highly targeted deception.

On the other side, there are efforts to develop technologies that authenticate media by proving it has not been altered. Examples include digital watermarks and verification built into cameras and recording devices. The aim is a system in which genuine media is clearly marked as such, making it easier to distinguish from fakes. This is an active area of research and could help protect people like Rima Hassan from unauthorized deepfake content.
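At its simplest, checking that a file has not been altered means comparing a cryptographic fingerprint of the file with one published by a trusted source. The sketch below does this with SHA-256 from Python's standard library. It only illustrates tamper evidence, and it rests on strong assumptions. The published digest must itself come from a trusted channel. Any re-encoding, which social platforms routinely apply, also changes the hash even when nothing was manipulated. Provenance standards such as C2PA Content Credentials work differently: they attach signed, certificate-based manifests to the media.

```python
import hashlib
import hmac


def fingerprint(data: bytes) -> str:
    """SHA-256 digest of the exact file bytes, as lowercase hex."""
    return hashlib.sha256(data).hexdigest()


def matches_published(data: bytes, published_hex: str) -> bool:
    """True only if the bytes are identical to those the publisher hashed."""
    return hmac.compare_digest(fingerprint(data), published_hex.strip().lower())


original = b"...video bytes as released by the publisher..."
published = fingerprint(original)  # assumed to be distributed through a trusted channel
print(matches_published(original, published))            # True
print(matches_published(original + b"\x00", published))  # False: any change breaks the match
```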

Ultimately, how deepfakes affect digital identity will likely depend on a combination of technological solutions, public education, and possibly new laws or regulations. It is a complex problem that calls for several approaches at once, because it is as much a societal problem as a technical one.

What Steps Can Organizations Take Against Deepfake Threats?

A better understanding of this technology helps executives take measures to protect themselves. The same holds for entire organizations, given how much harm deepfakes can cause. Companies and other groups need to address the threat proactively rather than waiting for an incident.

One important step is teaching employees what deepfakes are, how they are created, and what they look like. Training helps staff recognize suspicious content, whether it is an email with a deepfake audio attachment or a video that seems to show a colleague saying something odd. Awareness is arguably the first line of defense, because it makes everyone more vigilant.

Organizations should also have a clear plan for what to do if a deepfake incident occurs. This is often called an incident response plan. It outlines who needs to be informed, how to investigate the
